Nextflow¶

Nextflow is the most widely used workflow manager in bioinformatics.

This guide shows how to register an nf-core/scrnaseq Nextflow run using the nf-lamin plugin.

See Post-run script to learn how to register a Nextflow run using a post-run script instead.

To use the nf-lamin plugin, you need to configure it with your LaminDB instance and API key.

This setup allows the plugin to authenticate and interact with your LaminDB instance, enabling it to record workflow runs and associated metadata.

Set API Key¶

Retrieve your Lamin API key from your Lamin Hub account settings and set it as a Nextflow secret:

nextflow secrets set LAMIN_API_KEY <your-lamin-api-key>

Configure the plugin¶

Add the following block to your nextflow.config:

plugins {
    id 'nf-lamin'
}

lamin {
    instance = "<your-lamin-org>/<your-lamin-instance>"
    api_key = secrets.LAMIN_API_KEY
}

See nf-lamin plugin reference for more configuration options.

Example Run with nf-core/scrnaseq¶

This example registers a run of the nf-core/scrnaseq pipeline, including its inputs and outputs.

Run the pipeline¶

With the nf-lamin plugin configured, let’s run the nf-core/scrnaseq pipeline on remote input data.

# The test profile uses publicly available test data
!nextflow run nf-core/scrnaseq \
  -r "4.0.0" \
  -profile docker,test \
  -plugins nf-lamin \
  --outdir s3://lamindb-ci/nf-lamin/run_$(date +%Y%m%d_%H%M%S)


N E X T F L O W ~ version 25.10.4

Pulling nf-core/scrnaseq ...

downloaded from https://github.com/nf-core/scrnaseq.git

Downloading plugin [email protected]

Launching `https://github.com/nf-core/scrnaseq` [voluminous_jepsen] DSL2 - revision: e0ddddbff9 [4.0.0]

Downloading plugin [email protected]

Downloading plugin [email protected]

------------------------------------------------------

                                        ,--./,-.
        ___     __   __   __   ___     /,-._.--~'
  |\ | |__  __ /  ` /  \ |__) |__         }  {
  | \| |       \__, \__/ |  \ |___     \`-._,-`-,
                                        `._,._,'

nf-core/scrnaseq 4.0.0

------------------------------------------------------

Input/output options

input : https://github.com/nf-core/test-datasets/raw/scrnaseq/samplesheet-2-0.csv

outdir : s3://lamindb-ci/nf-lamin/run_20260224_145133

Mandatory arguments

aligner : star

protocol : 10XV2

Skip Tools

skip_cellbender : true

Reference genome options

fasta : https://github.com/nf-core/test-datasets/raw/scrnaseq/reference/GRCm38.p6.genome.chr19.fa

gtf : https://github.com/nf-core/test-datasets/raw/scrnaseq/reference/gencode.vM19.annotation.chr19.gtf

save_align_intermeds : true

Institutional config options

config_profile_name : Test profile

config_profile_description: Minimal test dataset to check pipeline function

Generic options

trace_report_suffix : 2026-02-24_14-51-47

Core Nextflow options

revision : 4.0.0

runName : voluminous_jepsen

containerEngine : docker

launchDir : /home/runner/work/nf-lamin/nf-lamin/docs

workDir : /home/runner/work/nf-lamin/nf-lamin/docs/work

projectDir : /home/runner/.nextflow/assets/nf-core/scrnaseq

userName : runner

profile : docker,test

configFiles : /home/runner/.nextflow/assets/nf-core/scrnaseq/nextflow.config, /home/runner/work/nf-lamin/nf-lamin/docs/nextflow.config

!! Only displaying parameters that differ from the pipeline defaults !!

------------------------------------------------------

* The pipeline

https://doi.org/10.5281/zenodo.3568187

* The nf-core framework

https://doi.org/10.1038/s41587-020-0439-x

* Software dependencies

https://github.com/nf-core/scrnaseq/blob/master/CITATIONS.md

WARN: The following invalid input values have been detected:

* --validationSchemaIgnoreParams: genomes

[d7/2624c5] Submitted process > NFCORE_SCRNASEQ:SCRNASEQ:FASTQC_CHECK:FASTQC (Sample_X)

[59/6eb274] Submitted process > NFCORE_SCRNASEQ:SCRNASEQ:FASTQC_CHECK:FASTQC (Sample_Y)

[4c/769d96] Submitted process > NFCORE_SCRNASEQ:SCRNASEQ:GTF_GENE_FILTER (GRCm38.p6.genome.chr19.fa)

[bc/1dd001] Submitted process > NFCORE_SCRNASEQ:SCRNASEQ:STARSOLO:STAR_GENOMEGENERATE (GRCm38.p6.genome.chr19.fa)

[ec/1108fe] Submitted process > NFCORE_SCRNASEQ:SCRNASEQ:STARSOLO:STAR_ALIGN (Sample_X)

[21/a18218] Submitted process > NFCORE_SCRNASEQ:SCRNASEQ:STARSOLO:STAR_ALIGN (Sample_Y)

[eb/ee3500] Submitted process > NFCORE_SCRNASEQ:SCRNASEQ:MTX_TO_H5AD (Sample_Y)

[e6/21a169] Submitted process > NFCORE_SCRNASEQ:SCRNASEQ:MTX_TO_H5AD (Sample_X)

[f4/ebf8ca] Submitted process > NFCORE_SCRNASEQ:SCRNASEQ:MTX_TO_H5AD (Sample_X)

[f3/4ef87e] Submitted process > NFCORE_SCRNASEQ:SCRNASEQ:MTX_TO_H5AD (Sample_Y)

[97/caf317] Submitted process > NFCORE_SCRNASEQ:SCRNASEQ:H5AD_CONVERSION:ANNDATAR_CONVERT (Sample_Y)

[c7/22d0d4] Submitted process > NFCORE_SCRNASEQ:SCRNASEQ:MULTIQC

[0b/def1b2] Submitted process > NFCORE_SCRNASEQ:SCRNASEQ:H5AD_CONVERSION:ANNDATAR_CONVERT (Sample_X)

[f0/b6f660] Submitted process > NFCORE_SCRNASEQ:SCRNASEQ:H5AD_CONVERSION:ANNDATAR_CONVERT (Sample_X)

[d1/2329ad] Submitted process > NFCORE_SCRNASEQ:SCRNASEQ:H5AD_CONVERSION:ANNDATAR_CONVERT (Sample_Y)

[f2/e9ec41] Submitted process > NFCORE_SCRNASEQ:SCRNASEQ:H5AD_CONVERSION:CONCAT_H5AD (combined)

[40/5109e2] Submitted process > NFCORE_SCRNASEQ:SCRNASEQ:H5AD_CONVERSION:CONCAT_H5AD (combined)

[b9/21204d] Submitted process > NFCORE_SCRNASEQ:SCRNASEQ:H5AD_CONVERSION:ANNDATAR_CONVERT (combined)

[63/5aa18b] Submitted process > NFCORE_SCRNASEQ:SCRNASEQ:H5AD_CONVERSION:ANNDATAR_CONVERT (combined)

-[nf-core/scrnaseq] Pipeline completed successfully-

What is the full command and output when running this command?

nextflow run nf-core/scrnaseq \
  -r "4.0.0" \
  -profile docker \
  -plugins nf-lamin \
  --input https://github.com/nf-core/test-datasets/raw/scrnaseq/samplesheet-2-0.csv \
  --fasta https://github.com/nf-core/test-datasets/raw/scrnaseq/reference/GRCm38.p6.genome.chr19.fa \
  --gtf https://github.com/nf-core/test-datasets/raw/scrnaseq/reference/gencode.vM19.annotation.chr19.gtf \
  --protocol 10XV2 \
  --aligner star \
  --skip_cellbender \
  --outdir s3://lamindb-ci/nf-lamin/run_$(date +%Y%m%d_%H%M%S)
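If you launch the pipeline from a Python script rather than a shell, the explicit command can be assembled as an argument list. The following is only a sketch under stated assumptions: `build_scrnaseq_command` and its `bucket_prefix` parameter are hypothetical names introduced here, and the timestamp format mirrors the shell's `date +%Y%m%d_%H%M%S`.

```python
from datetime import datetime


def build_scrnaseq_command(
    now: datetime,
    bucket_prefix: str = "s3://lamindb-ci/nf-lamin",  # hypothetical parameter
) -> list[str]:
    """Return the argv list for the explicit nf-core/scrnaseq invocation."""
    # Same format as the shell's `date +%Y%m%d_%H%M%S`.
    stamp = now.strftime("%Y%m%d_%H%M%S")
    test_data = "https://github.com/nf-core/test-datasets/raw/scrnaseq"
    return [
        "nextflow", "run", "nf-core/scrnaseq",
        "-r", "4.0.0",
        "-profile", "docker",
        "-plugins", "nf-lamin",
        "--input", f"{test_data}/samplesheet-2-0.csv",
        "--fasta", f"{test_data}/reference/GRCm38.p6.genome.chr19.fa",
        "--gtf", f"{test_data}/reference/gencode.vM19.annotation.chr19.gtf",
        "--protocol", "10XV2",
        "--aligner", "star",
        "--skip_cellbender",
        "--outdir", f"{bucket_prefix}/run_{stamp}",
    ]


# A fixed timestamp keeps the example reproducible; use datetime.now() in practice.
command = build_scrnaseq_command(datetime(2026, 2, 24, 14, 51, 33))
print(" ".join(command))
```

The resulting list can be passed to `subprocess.run(command, check=True)`. Using a list instead of a single shell string avoids quoting issues with the URLs.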

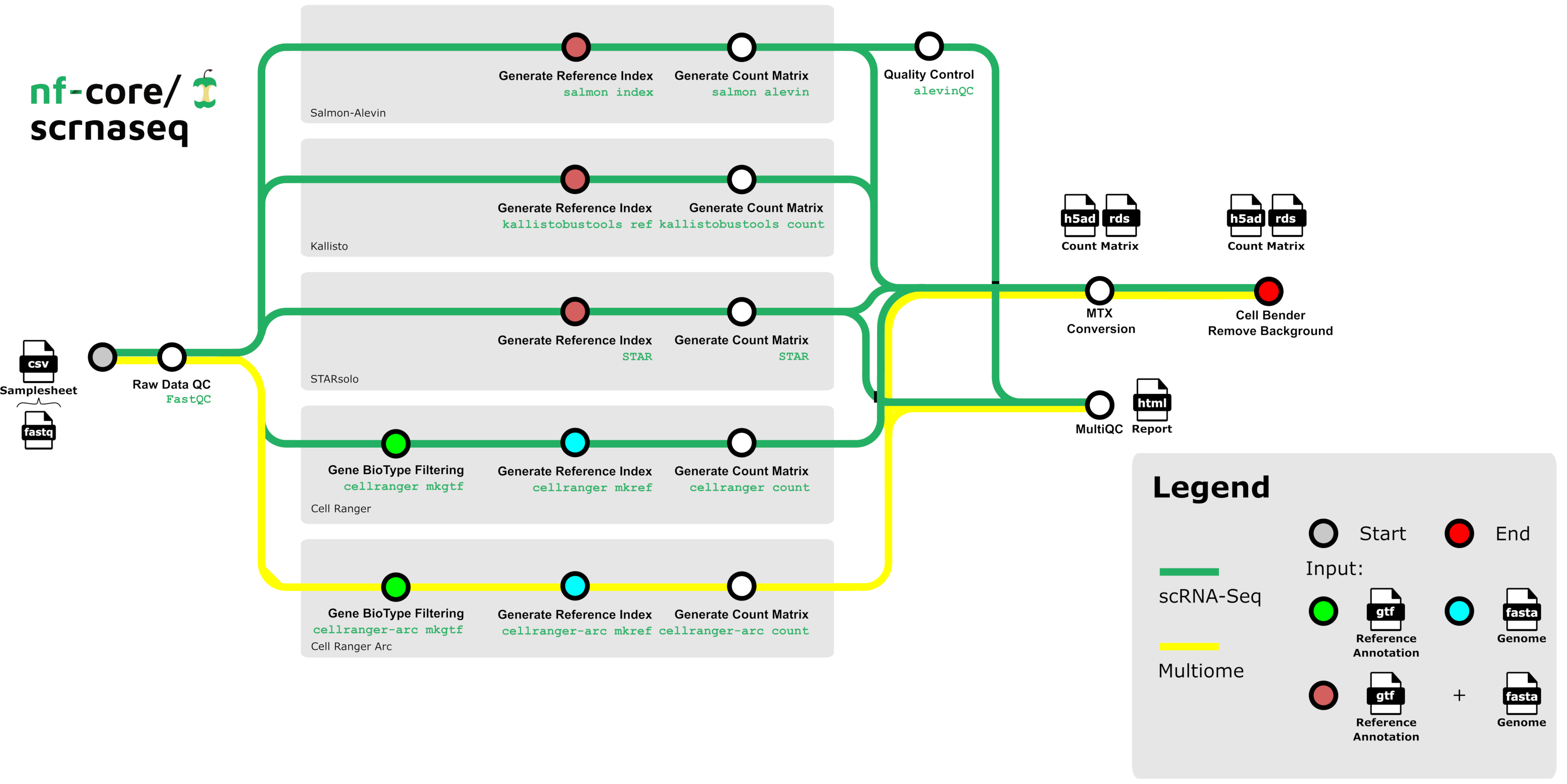

What steps are executed by the nf-core/scrnaseq pipeline?

When you run this command, nf-lamin will print links to the Transform and Run records it creates in Lamin Hub:

✅ Connected to LaminDB instance 'laminlabs/lamindata' as 'user_name'

Transform J49HdErpEFrs0000 (https://staging.laminhub.com/laminlabs/lamindata/transform/J49HdErpEFrs0000)

Run p8npJ8JxIYazW4EkIl8d (https://staging.laminhub.com/laminlabs/lamindata/transform/J49HdErpEFrs0000/p8npJ8JxIYazW4EkIl8d)

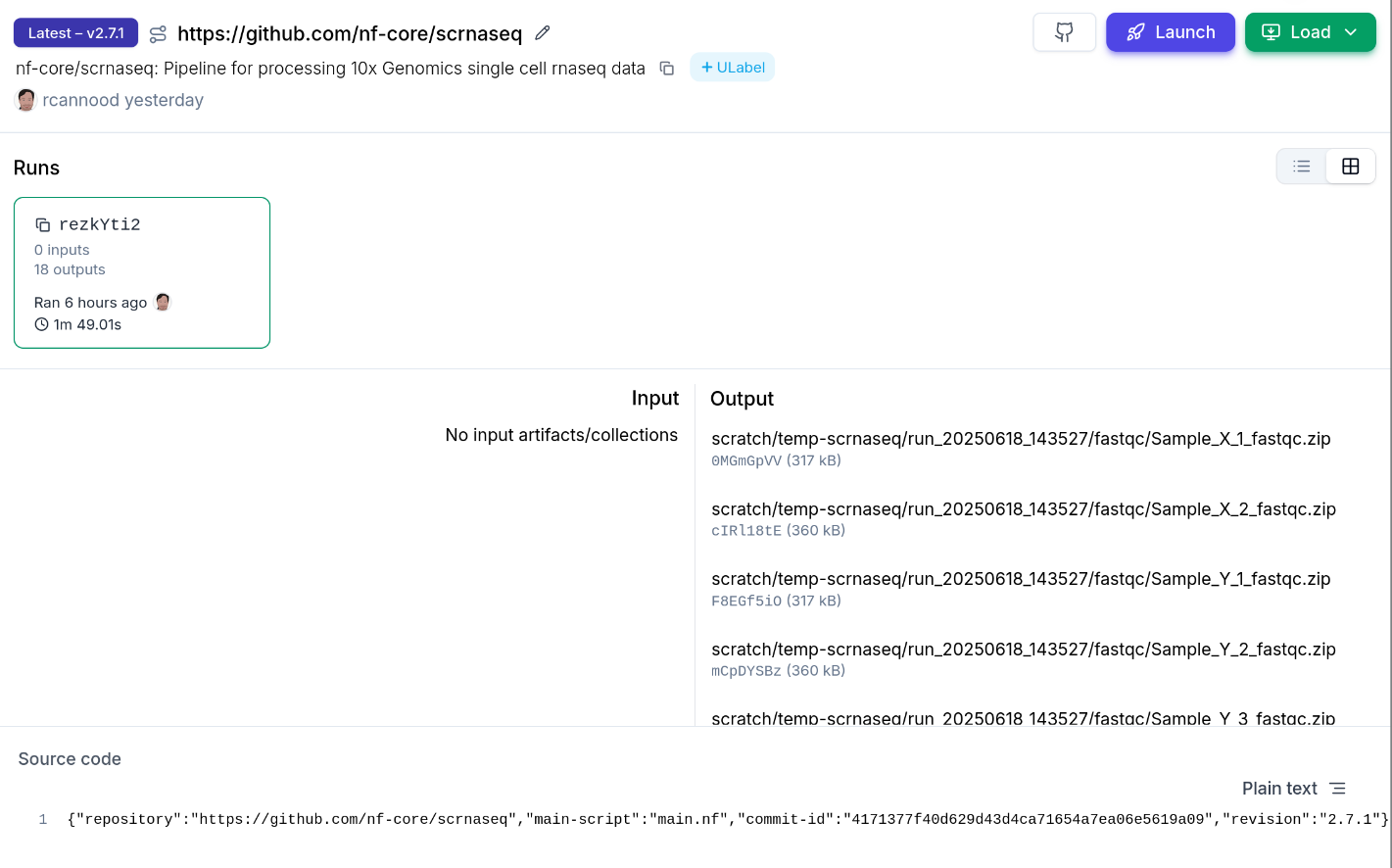
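To reuse the printed identifiers in a downstream script, you could pull them out of the captured log. The sketch below assumes the log lines have exactly the shape shown above; that format is not a documented interface and may change between plugin versions. `extract_records` is a hypothetical helper name.

```python
import re

# Matches lines shaped like the sample output above:
#   Transform <uid> (<url>)
#   Run <uid> (<url>)
RECORD_LINE = re.compile(r"^(Transform|Run)\s+(\w+)\s+\((https?://\S+)\)\s*$")


def extract_records(log_text: str) -> dict[str, dict[str, str]]:
    """Map 'transform' and 'run' to the uid and URL found in the log text."""
    records: dict[str, dict[str, str]] = {}
    for line in log_text.splitlines():
        match = RECORD_LINE.match(line.strip())
        if match:
            kind, uid, url = match.groups()
            records[kind.lower()] = {"uid": uid, "url": url}
    return records


# Sample lines copied from the output shown above.
sample_log = """\
Connected to LaminDB instance 'laminlabs/lamindata' as 'user_name'
Transform J49HdErpEFrs0000 (https://staging.laminhub.com/laminlabs/lamindata/transform/J49HdErpEFrs0000)
Run p8npJ8JxIYazW4EkIl8d (https://staging.laminhub.com/laminlabs/lamindata/transform/J49HdErpEFrs0000/p8npJ8JxIYazW4EkIl8d)
"""

records = extract_records(sample_log)
print(records["run"]["uid"])
```

Lines that do not match the pattern, such as the connection message, are ignored.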

View transforms & runs on Lamin Hub¶

You can explore the run and its associated artifacts through Lamin Hub or the Python package.

Via Lamin Hub¶

Transform: J49HdErpEFrs0000

Run: p8npJ8JxIYazW4EkIl8d

Using LaminDB¶

import lamindb as ln

# Make sure you are connected to the same instance
# you configured in nextflow.config
ln.Run.get("p8npJ8JxIYazW4EkIl8d")

This will display the details of the run record in your notebook:

Run(uid='p8npJ8JxIYazW4EkIl8d', name='trusting_brazil', started_at=2025-06-18 12:35:30 UTC, finished_at=2025-06-18 12:37:19 UTC, transform_id='aBcDeFg', created_by_id=..., created_at=...)
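Beyond printing the record, you may want to collect a few fields into a plain dictionary, for example for a report. The sketch below assumes the run record exposes `uid`, `transform`, `started_at`, `finished_at`, and an `output_artifacts` relation whose artifacts have a `key`; check these names against your LaminDB version. `load_run` and `summarize_run` are hypothetical helpers, not part of LaminDB or nf-lamin.

```python
def load_run(run_uid: str):
    """Fetch a Run record; requires lamindb and a connected instance."""
    # Imported lazily so summarize_run below can be used without lamindb.
    import lamindb as ln

    return ln.Run.get(run_uid)


def summarize_run(run) -> dict:
    """Collect a few fields from a Run record into a plain dict.

    Assumes the attribute names listed in the text above.
    """
    return {
        "run_uid": run.uid,
        "transform_uid": run.transform.uid,
        "started_at": run.started_at,
        "finished_at": run.finished_at,
        "output_keys": [artifact.key for artifact in run.output_artifacts.all()],
    }


# Usage, once connected to the instance configured in nextflow.config:
#   summary = summarize_run(load_run("p8npJ8JxIYazW4EkIl8d"))
```

Keeping the lookup and the summary in separate functions lets you exercise the summary logic with a stand-in object when no instance is available.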